BuzzFeed was one of the first major publishers to lean heavily on AI-generated content.

They drew scrutiny when a litany of that AI content turned out to be plagiarized, copied and pasted, factually incorrect, awkward or simply poorly written.

Most recently, they’ve resorted to shutting down entire business units because of their inability to compete.

Sports Illustrated, also an early adopter, suffered from similar issues and is now also laying off staff to stem the bleeding.

Notice a pattern here?

Using AI to create content isn’t bad in and of itself.

But it often produces bad content. And that’s the problem.

This article dissects AI-generated articles and contrasts them with one crafted by a human expert to illustrate the potential pitfalls of relying solely on AI-generated content.

Why brands are flocking to AI-written content

Look. It makes sense.

AI’s promise is incredibly seductive.

Who wouldn’t want to automate or streamline or replace inefficiency?!

And I can’t think of a more inefficient process than sitting in front of a blank white screen and starting to type.

As a red-blooded capitalist, I empathize. However, as a long-term brand builder, I also recognize that AI content simply isn’t good enough.

The juice ain’t worth the squeeze.

Too many fundamental problems remain for it to be viable for any serious, ambitious brand in a competitive space.

In the future? Sure, who knows? We’ll probably serve robot masters one day.

But right now, the only use case we’ve seen that makes any sense is extremely black-and-white material.

You know the classic SEO playbook: Glossaries.

Plain, vanilla, top-of-funnel definitions.

Every SEO and their dog has heard about the “Great SEO Heist,” an infamously viral SEO story.

Now, I’m not going to kick someone while they’re down.

But I am going to kick the $#!& out of their content ’cause it’s just not any good.

So let’s travel back in time for a second.

Let’s look back at the warm, sunny days of summer ’23, when the brand in question ranked well using AI content. Then, let’s ignore the noise around it and rationally assess the content quality (or lack thereof).

Whoosh – top organic rankings from August ’23:

What do you notice?

Tons of glossary-style, definition-based content.

Makes sense on the surface. LLMs work by sucking in everything around them, picking up patterns and then regurgitating them back out.

So, in theory, an LLM should be able to do a passable job of vomiting up black-and-white information.

Kinda hard to screw up. Right?

Especially when you understandably lower the bar and don’t expect any true insight or expertise to shine through.

But here’s where it goes from bad to worse.

Problem 1: Top-of-the-funnel traffic doesn’t convert

This might sound like a trick question, but it shouldn’t be:

Is the goal of SEO to drive eyeballs or buyers?

Ultimately, it’s both. You can’t drive buyers without eyeballs.

And you often can’t rank for the most commercial terms in your space without having a big site to begin with.

This Great SEO Catch-22 is why the Beachhead Principle is valuable.

But if you had to pick one? You’d pick buyers. You ultimately need conversions to scale into eight-, nine- and 10-figure revenues.

Now. There is a time and place for expanding top-of-the-funnel content, especially when you’re in scale mode and trying to reach people earlier in the buying cycle.

However, as a general rule, extremely top-of-the-funnel work won’t convert.

Like, ever.

In B2C? In low-dollar amounts, impulse or transactional purchases? Possibly. But still unlikely. It’d require one helluva Black Friday discount.

But B2B? Or any other big decision that often requires complex, consultative sales cycles that naturally take weeks and months of actual persuasion and credibility?

No chance. Here’s why.

Look back at the Ahrefs example above, where one of the ranking keywords last summer was “European **** Format.”

Now, let’s Google that query and see what comes up:

That’s right, an instant answer!

Exhibit A: Zero-click SERPs.

So, the searcher can get the answer they want without ever having to click on the webpage in question.

Kinda hard to convert visitors when they don’t even need to visit your website in the first place.

Think this pervasive problem will only get better when more people start using AI tools to sidestep or augment traditional Google searches?

Think again.

Problem 2: Easy-to-come rankings are also easy-to-go

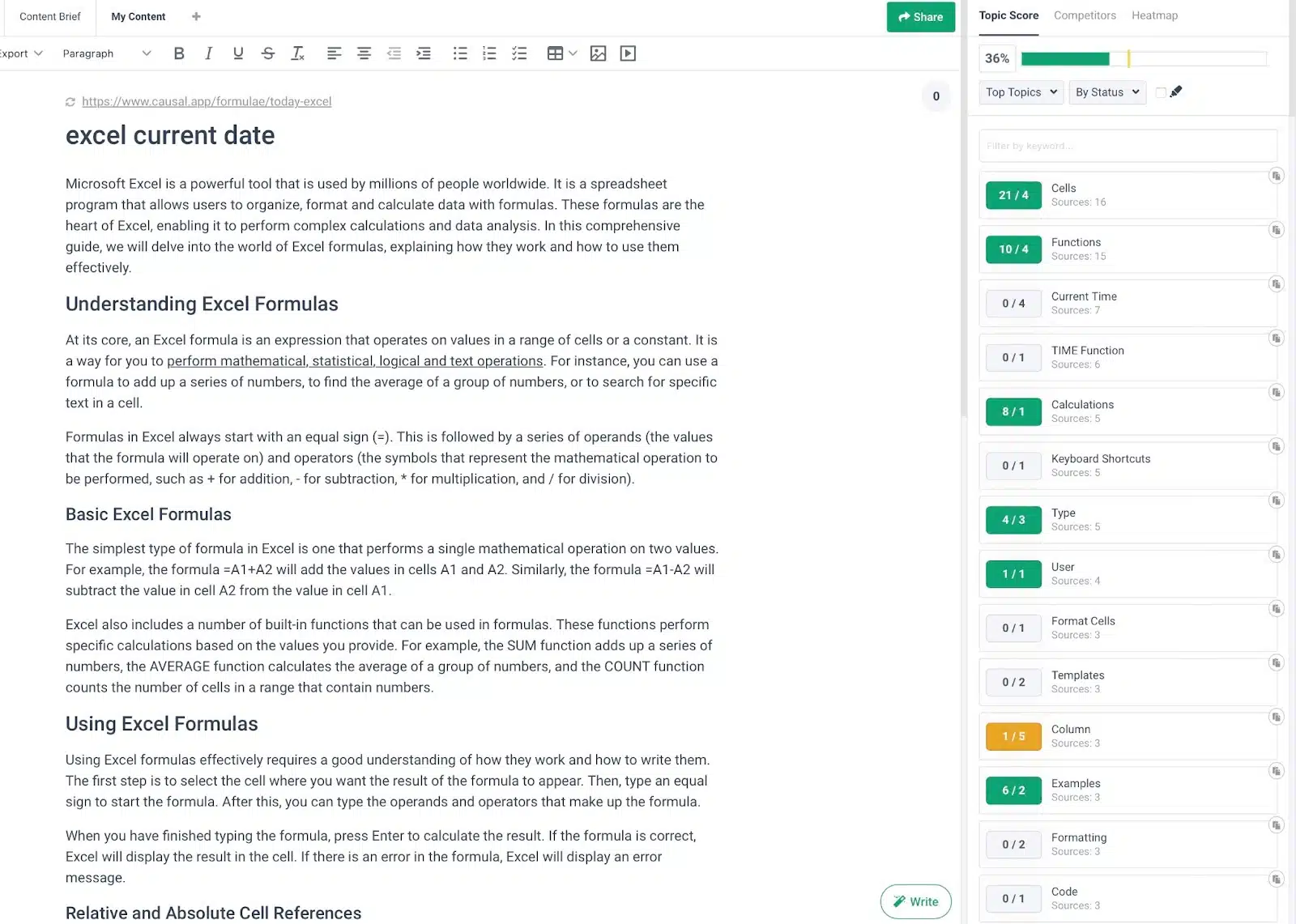

OK. Let’s look at another example.

The “shortcut to strikethrough” query was (at one point) the top traffic driver for this site.

So let’s dig deeper and unpack how competitive this query really is.

Traditional measures of “keyword difficulty” are often biased toward the quality and quantity of referring domains pointing to the individual pages that rank.

They often gloss over or simply ignore a site’s overall domain strength, its existing topical authority, its content quality and a host of other important considerations.

(That’s why a balanced scorecard approach is more effective for judging ranking ability.)

But there are two big issues with the graph above:

Issue 1: Easy-to-rank queries are often easy to lose.

All it takes is one competitor worth their salt to publish something good and put in the minimum amount of distribution effort, and you’ll lose that ranking ASAP.

Contrast this with a definition-style article we did with Robinhood waaaaaaay back in 2019 that still ranks well to this very day…

… while going up against incredibly tough competitors, too:

Good rankings only matter if you can hold onto them for years, not weeks!

Issue 2: Low-competition keywords are low competition ’cause there’s no $$$ in them!

Competition = money. The lack of competition in SEO, like in entrepreneurship, is usually a bad sign. Not a good one.

So, can you use AI content to pick up rankings for extremely top-of-the-funnel, low-competition keywords?

Technically, yes.

But are you likely to hang on to that ranking over the long term, while also actually generating business value from it?

No. You’re not.

Problem 3: AI content is (and always will be) poorly written

Fine. I’ll say it.

Most people aren’t good at writing. It’s a skill and a craft.

Sure, it’s subjective. But you learn some indisputable truths when you get good at it.

Here, I’ll give you one helpful tidbit to keep in the back of your mind.

How do you spot “good” vs. “bad” writing online?

Specificity.

Good writing is specific: to its audience and, more importantly, in the words it selects and the context it provides to bolster its claims.

Bad writing is generic. It’s surface level. It’s devoid of insight.

It sounds like a freelance writer wrote it instead of a bona fide expert on the topic.

That’s why AI content manufactured by LLMs, in their current iteration, will always struggle.

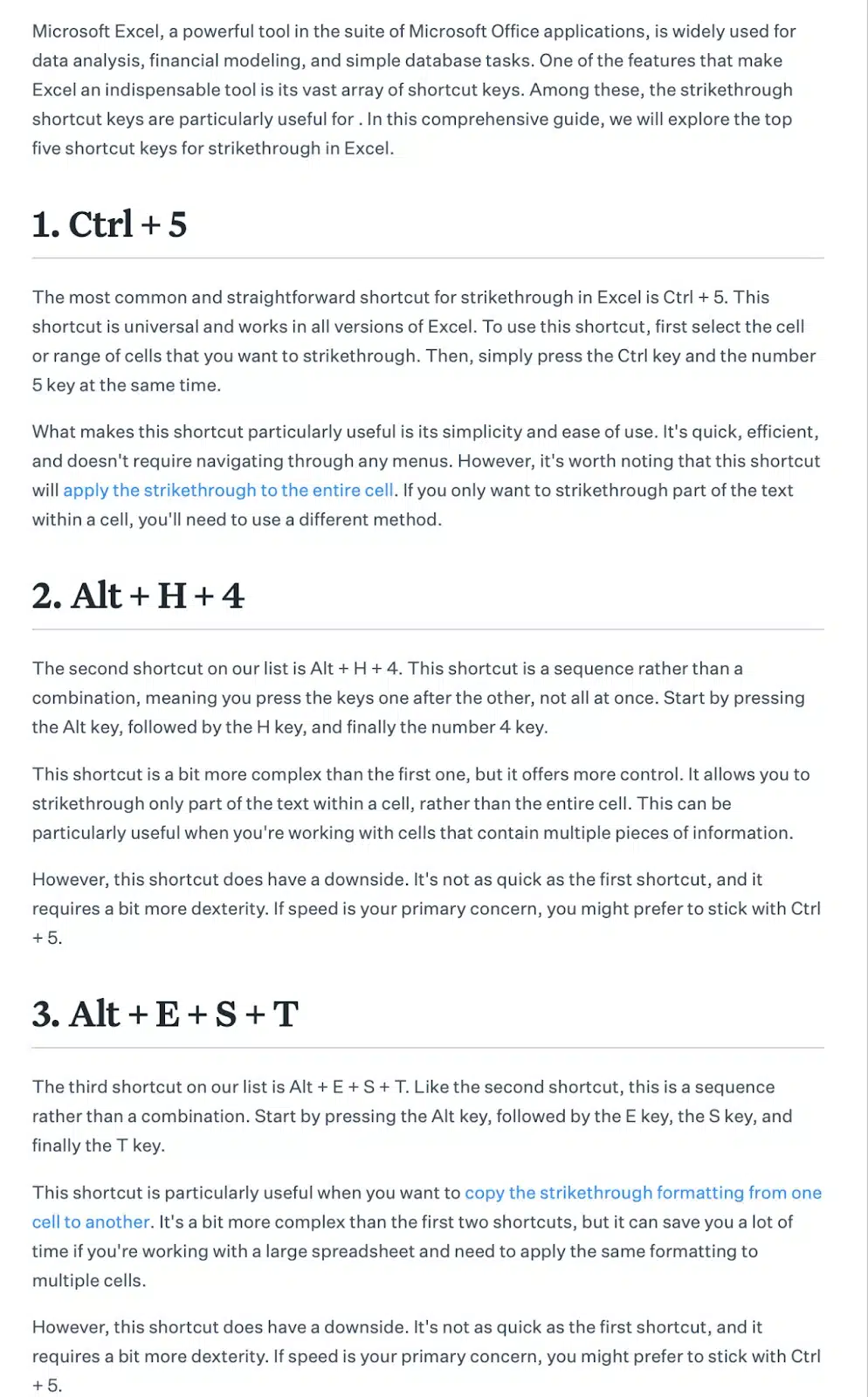

Again, let’s look at actual examples! (See? Specificity!)

That box in red above?

Any half-decent editor would just remove the entire thing ASAP. And probably question why this person is writing for them in the first place.

It says a lot without saying anything at all. Pure fluff.

Flaccid, impotent writing at its finest.

And the box in yellow? Slightly better. Barely, though.

At least it gives some actual examples. However, the problem with this section is twofold.

First, the examples are extremely surface-level at best and sloppy at worst.

This is like when a teenager spouts off about something they just Googled two seconds ago, trying to make it sound like they know what they’re talking about now.

You know what it looks like when an amateur simply regurgitates what other people are saying vs. actually doing the research and knowing what they’re talking about?

It looks exactly like that.

More importantly, while it mentions a few “advanced Excel formulas,” it fails to actually describe any “advanced Excel formulas.”

That’s a problem! Because it’s supposed to be the entire point of this section!

Do you want to venture a guess as to why it’s failing to do that?

Because it doesn’t actually understand “advanced Excel formulas.”

By definition, LLMs (and bad, amateur writers alike) don’t actually understand what they’re writing about.

You can’t be specific about something if you don’t understand it in the first place.

AI content (and the underlying LLMs) can’t associate different bits of knowledge and then expertly knit them into arguments that form a coherent narrative.

Now, I know what you’re thinking:

“OK, Mr. Smarty Pants. Show me an example of good writing in a definition article, then?”

Fine. I’ll see your bet and raise you.

Here’s the counter-example, showcasing actual fact-checked research into the centuries-old evolution of “checks and balances” across multiple cultures and civilizations through time.

Even if you knew what “checks and balances” were going into this, you undoubtedly just learned something about their evolution and context, and you now understand the subject better than you did before you started reading.

Specificity, FTW!


Problem 4: AI content isn’t optimized well enough for search, either

Today, I have the privilege of working with smart, amazing brands.

But 15-odd years ago? It was the opposite.

It used to drive me nuts when companies thought SEO was a magical process where you come in at the very end of a new website or piece of content, sprinkle your SEO magic pixie dust on it, and all will be good.

And yet, fast-forward to today, and AI content often falls into the same trap.

Good “SEO” content today is engineered to be properly “optimized” from the very beginning.

It takes into account everything, including:

- The audience’s knowledge or pain points.

- True search intent.

- The overall structure and style of content.

- The structure and headers.

- Questions being answered.

- Related topics.

- Other relevant information on your site.

Exhibit C:

Once again, this is difficult to do well because it requires several experts to work together to determine what the vision, structure and execution of a piece look like before a single word is ever written.

AI content, on the other hand?

Sprinkle away!

Yes, you can prompt it. You can finesse it (kinda). You can try to add decent headers.

But then you’re often left with something that looks like this:

Length is fine. Headers and overall structure of content (based on SERP layout) are also fine.

But on-page optimization kinda sucks:

- Semantic keywords and related topics are sparse, well below the average for competitors ranking for this query.

- How about internal links to reinforce your clusters and create a dense site hierarchy, improving topical authority around these subjects for the long haul? Also nonexistent.

- How about image alt attributes for accessibility? Oh wait, there are no images. Never mind.

- And barely any questions answered, pain points addressed, problems solved or related “People also ask” questions covered, even though Google shows them to you.

Like these:

This is the problem with shortcuts.

When you do things correctly, from the beginning, you can plan and be proactive and specifically structure things to provide yourself with the best possible chance to succeed.

But when you’re over-relying on the Ozempic of the content world (AI), you’re forced to take shortcuts because of the self-imposed limitations.

The output is worse for it.

Problem 5: AI content mansplains – good writing imparts understanding

Specificity is a hallmark of good writing because it immediately tells readers they’re understood and provides insight that actually informs how they think.

AI, like poor writing in general, mansplains.

It offers up generic crap that readers already know.

And this simple difference is also why visuals matter so much online.

You shouldn’t add images to an article just because a dumb pre-publishing requirement says so.

Your checklist says “one image per 300 words.” Check, marked.

A generic stock image might as well not even be included.

No, the real reason images are critical is that they shape the actual narrative!

All the words I’m typing before and after each image add context to the examples shown, and those examples bolster my claims.

That way, I gain credibility. (We’ll come back to this below.)

And because I can back up my claims, you know I’m not just spouting B.S.

So once again, let’s look at this text-only AI article (even though it discusses a visual concept):

Meh. The writing still sucks.

But more importantly, AI can’t weave a connection between images and text. ’Cause that still requires nuance and context (which it entirely lacks).

Let’s compare that with the takeaway below, which does three important things AI and LLMs can’t do:

- Provides a unique or novel simile for what “checks and balances” are and how they work.

- Gives readers a shorthand of sorts, a mental leap in logic, to help them immediately understand the dynamic nature and tension of this intangible concept.

- Shows a visualization that backs up the simile so that the sum of this section is greater than its parts.

AI, by contrast, could only hope to do this by outright copying this exact article.

Which expertly brings us to the next point.

Problem 6: AI content is basically plagiarism

I mean, this one should be obvious by now.

Once again, LLMs – by definition – are essentially a form of “indirect” plagiarism. They’re just re-sorting words that most often appear together.

Look up any of the current lawsuits to see why authors, for instance, might be upset that their copyrighted intellectual property is being used to train these ******.

Typically, you’ll find that even bad amateur writers aren’t stupid enough to “directly” plagiarize something: just copy and paste other sources and pretend it didn’t happen.

But they will do what LLMs do: simply Google the top few results and then rehash or recycle what they see.

Let’s plug one of these articles into Grammarly’s plagiarism checker and see how it shakes out:

Not great. Not even good.

Yet again, the strengths of how LLMs work are also their greatest weaknesses, like some uber-nerdy form of jiu-jitsu.

The article in question kinda, sorta sounds like a bunch of pre-existing academic journal articles, because the freaking model was trained on those same academic sources.

“Good” SEO content should be:

- Interesting.

- Memorable.

- Branded.

- Useful.

- Insightful.

- Entertaining.

Kinda hard to do that when you’re just recycling pre-existing content out there!

If a writer turned in an article to us with ~14%+ plagiarism, they’d be fired on the spot.

What should a Grammarly plagiarism check look like? Like this: clean as a whistle.


Problem 7: Buyers buy from trusted brands, requiring credibility, something AI content lacks entirely

Like any good narrative, let’s finish where we started.

End at the beginning. (And yet another thing AI can’t do!)

Y’all know about E-E-A-T. We don’t need to retread old territory – no AI mansplaining necessary.

Google has already told you it values credibility.

But what if we back up a second?

- Which sources will Alexa pluck from?

- Who is OpenAI going to copy and paste first?

- Which Quora posts get the most upvotes?

That’s right. The best answers and the most thorough replies!

These are typically produced by an expert.

That’s ’cause expertise builds credibility. And credibility, or trust, is ultimately why people decide to spend their hard-earned green with you vs. your competitors.

What are the hallmarks of credibility in content today that AI content completely lacks?

- The writer is an expert, writing from years of first-hand experience.

- Expert quotes are sourced and used to bolster individual claims being made.

- Third-party stats and links from reputable sources can either support arguments or provide counterexamples to uncover any potential bias and show the other side of an argument.

- And the damn thing is fact-checked by at least a second (if not a third) pair of eyes to confirm the points are facts, not falsehoods.

True credibility has nothing to do with putting a fake doctor’s byline on your AI article and calling it a day.

It’s like when your partner gets mad because you lied. Not because of what you said but because of what you didn’t.

A lie by omission is still a lie, at least in ***** land.

The most successful, profitable companies today are run by adults working well together, pulling in the same direction over the years to build a memorable, differentiated, meaningful brand that will stand the test of time.

Not by grasping at straws, looking for shortcuts and silver bullets or phoning it in with the bare minimum, then acting surprised when it doesn’t work, leading to entire teams being laid off or divisions shut down.

Shortcuts might work over the short term.

You might pick up a few rankings here or there for a few months. Maybe even a year or two.

But will it deliver sustainable growth five or 10 years from now?

Just ask BuzzFeed or Sports Illustrated where a race to the bottom ultimately leads you.

Is SEO content an expense or an investment?

All of this raises the million-dollar question:

Is SEO content an “expense” or an “asset”?

Is “content” just an expense line on the P&L, to be minimized as much as possible so it costs you the least?

Or, if done well, could it be an “asset” on the balance sheet, with a defined payback period, creating a defensible marketing moat that will produce a flywheel of future ROI that only grows exponentially over the long term?

Working with hundreds of brands over the past decade has shown me that there’s often a 50-50 split on this decision.

But how a brand answers it is often the best indicator of future SEO success.

Opinions expressed in this article are those of the guest author and not necessarily Search Engine Land.

Source link : Searchengineland.com