PPC best practices come from a variety of places. Some of those sources are:

- Google Ads reps.

- The Help Center.

- Official certifications.

- Auto-apply and manual recommendations.

- Ad strength recommendations.

- And even automated assets to an extent.

However, depending on the source, you could get very different answers. So how do you know when to apply a “best practice” and when to be critical of it?

Many PPC professionals can relate: You see “Google” on your caller ID, and you momentarily feel a boost in ego.

But reality sets in when you realize it’s often a junior Google representative, or even a third-party vendor, who needs to tick some boxes to meet quarterly goals, and whose advice isn’t necessarily tailored to your account’s context. This conflict of interest makes whatever “best practice” they recommend risky.

In the end, you hang up and realize that our role as advertisers is to deliver the best possible results with the smallest possible budget, while the role of Google Ads (or any other ad network) is to get you to spend more.

So naturally, our relationship with Google Ads’ best practices has to be balanced. Let’s explore the most common pitfalls and also recognize when Google Ads does a fantastic job.

Leverage automated bidding

There are probably just a few people left who can still successfully run manual CPC campaigns, and that’s a good thing. Look, I know the PPC community is angry at Google for raising ad prices, and some even go as far as saying that automated bidding strategies are only meant to make advertisers spend more.

But still, anyone who ran proper A/B tests back in the day knows that correctly set up automated bidding strategies outperform manual bidding 99% of the time.

I’m glad Google Ads rolled out this great tool almost 10 years ago. It’s a best practice you can apply almost every time.

Why do I say “almost,” though? If Google Ads clarified what optimal setups look like (conversion density, latency, frequency, etc.), it would be perfect. Right now, the only guidance we have is:

“When you have little to no conversion data available, Smart Bidding can still use query-level data beyond your bid strategy to build more accurate initial conversion rate models.”

– “How our bidding algorithms learn,” Google Ads Help

So if your automated bid strategy doesn’t perform quite as well as you’d like, review the parameters above. Otherwise, you should most likely move away from manual CPC.

Grow with broad match keywords

Just like automated bidding, automated targeting (that’s pretty much what broad match is these days) is getting really good. As such, I believe Google Ads is right to push those broad match types harder.

There are plenty of studies showing how broad match keywords outperform phrase match keywords. It certainly helps speed up PPC staff training and streamline campaign maintenance with lighter campaign structures. So what’s not to like?

Similar to automated bidding, though, I would still caution against using such a match type blindly. For example, I strongly advise against turning on:

- The “Grow your Smart Bidding campaigns with broad match” auto-apply recommendation.

- The broad match keywords campaign setting.

Why? Because, as with automated bidding, Google Ads hasn’t shared details on optimal setups.

In my experience, you want to fuel your bid strategy with some initial down-funnel data (purchases) before trusting Google’s AI. Otherwise, like any AI, it will produce superficial results because it can’t see past top-of-funnel KPIs (pageviews, etc.).

Another recurring theme is the gradual removal of information. As is often the case these days, Google cited privacy to justify limiting search term reporting.

While performance is definitely getting better with broad match keywords, visibility isn’t. That’s a shame, since search term reports helped inform broader, holistic marketing decisions.

Growing with broad match keywords is an interesting best practice. But you should be aware of its limitations.


Upgrade to data-driven attribution

Starting to notice a theme? Data-driven attribution (DDA) is yet another AI-driven innovation from Google. And just like the previous best practices, it certainly has value, but also limitations.

Perhaps most importantly, DDA shows marketers that cross-channel journeys actually happen. By nature, distributing conversions across several audiences improves overall performance, since it’s more granular and less blunt.

However, I believe Google is even less transparent here than it is with automated bidding and broad match.

For one, you cannot see conversion paths per user cohort. You used to be able to compare DDA with other attribution models, but that option is now gone.

The only other option we’re left with is last-click attribution, which is notoriously simplistic (depending on your purchasing journey).

In the end, it’s a great feature, but it shows early signs of Google’s dark side: it doesn’t care about your context and thinks poorly of you, the advertiser. Don’t get me wrong, I love processing and automating stuff whenever possible. And AI is a fantastic tool.

However, thinking that everything is measurable is a sin, even in data marketing.

What if your purchasing journey isn’t correctly reflected in data available to Google Ads’ algorithms for whatever reason? What will DDA base its conversion distribution on? Your guess is as good as mine.

So while I believe most advertisers should follow this best practice, we should all be well aware of its limitations and cross-reference DDA results with other attribution or incrementality results.
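Google doesn’t publish how its DDA model assigns credit, so it can’t be reproduced here. As a minimal illustration of why the choice of model matters, this sketch compares two transparent rule-based models, last-click and linear, on four conversion paths. The channel names and paths are hypothetical and were made up for the example.

```python
from collections import defaultdict

# Hypothetical converting paths: ordered touchpoints, each path ending in one conversion.
PATHS = [
    ["display", "generic_search", "brand_search"],
    ["youtube", "brand_search"],
    ["generic_search"],
    ["display", "youtube", "generic_search", "brand_search"],
]


def last_click(paths):
    """Give 100% of each conversion to the final touchpoint in its path."""
    credit = defaultdict(float)
    for path in paths:
        credit[path[-1]] += 1.0
    return dict(credit)


def linear(paths):
    """Split each conversion evenly across every touchpoint in its path."""
    credit = defaultdict(float)
    for path in paths:
        share = 1.0 / len(path)
        for channel in path:
            credit[channel] += share
    return dict(credit)


if __name__ == "__main__":
    lc, lin = last_click(PATHS), linear(PATHS)
    for channel in sorted(set(lc) | set(lin)):
        # Channels that never close a path get 0 conversions under last-click.
        print(f"{channel:15} last-click={lc.get(channel, 0):.2f}  linear={lin.get(channel, 0):.2f}")
```

In this toy data, last-click gives display and YouTube zero credit, while the linear model gives them roughly 0.58 and 0.75 conversions. Neither model is DDA, and neither measures incrementality. That still requires something like a holdout test.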

Adopt Performance Max campaigns

This best practice aligns with broad match keywords, DDA and automated bidding. But it goes even further: the promise is to identify the perfect media mix for you, thanks to Google’s AI. The shocking part is that it can totally deliver.

So why am I ranking that best practice with an orange traffic light?

Because:

- Most advertisers are simply not ready to embrace such a tool.

- Tracking is too often limited to revenue for ecommerce clients and MQLs for lead gen clients – no sense of LTV or profits.

Another hole in advertisers’ toolkits can often be found in data pipelines:

- Conversion volumes are too weak.

- Freshness doesn’t mean a thing to traffic managers.

- Frequency is a nice-to-have instead of a must-have.

Ultimately, Performance Max’s algorithms can only deliver impressions approximately.

Remember: their output can only be as good as the input you feed them. Do you think your setup is strong enough to feed such a beast?

So when your favorite Google Ads rep tells you to switch everything to Performance Max, think again. Do you tick all these boxes?

- Do I feed Google Ads with revenue data, at the very least?

- Do I have a purchasing journey that’s short enough?

- Is my business’ current media mix mostly based on non-branded and non-retargeting traffic?

- Am I ready to give up on lots of valuable marketing insights?

There are naturally a lot of other questions you could ask yourself, but those are the main ones to me. Adopting Performance Max is not a straightforward best practice, as you can see.

Being critical: Best practices vs. context

Best practices work most of the time, but not all the time. Just like averaged metrics, they can hide crazy standard deviations.
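To make the point about averages concrete, here is a small sketch using hypothetical numbers: the percentage change in conversions after applying the same recommendation to five accounts. Both groups average +10%, but the spread, and the number of accounts that actually lost conversions, is very different.

```python
from statistics import mean, pstdev

# Hypothetical % change in conversions after applying the same recommendation
# to five different accounts. Both groups average +10%.
uniform_results = [10, 12, 8, 10, 10]
mixed_results = [40, -20, 35, -15, 10]

for label, results in (("uniform", uniform_results), ("mixed", mixed_results)):
    # Count accounts where the "best practice" actually hurt performance.
    losers = sum(1 for r in results if r < 0)
    print(f"{label:8} mean={mean(results):+.1f}%  std_dev={pstdev(results):.1f}  accounts_worse_off={losers}")
```

The uniform group has a population standard deviation of about 1.3 points, versus about 24.7 for the mixed group. In the mixed group, two of the five accounts end up worse off despite the identical average.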

So remain critical of best practices, based on your knowledge of the account. More often than not, that context is challenging, if not impossible, for AI to fully grasp. And here lies your true value.

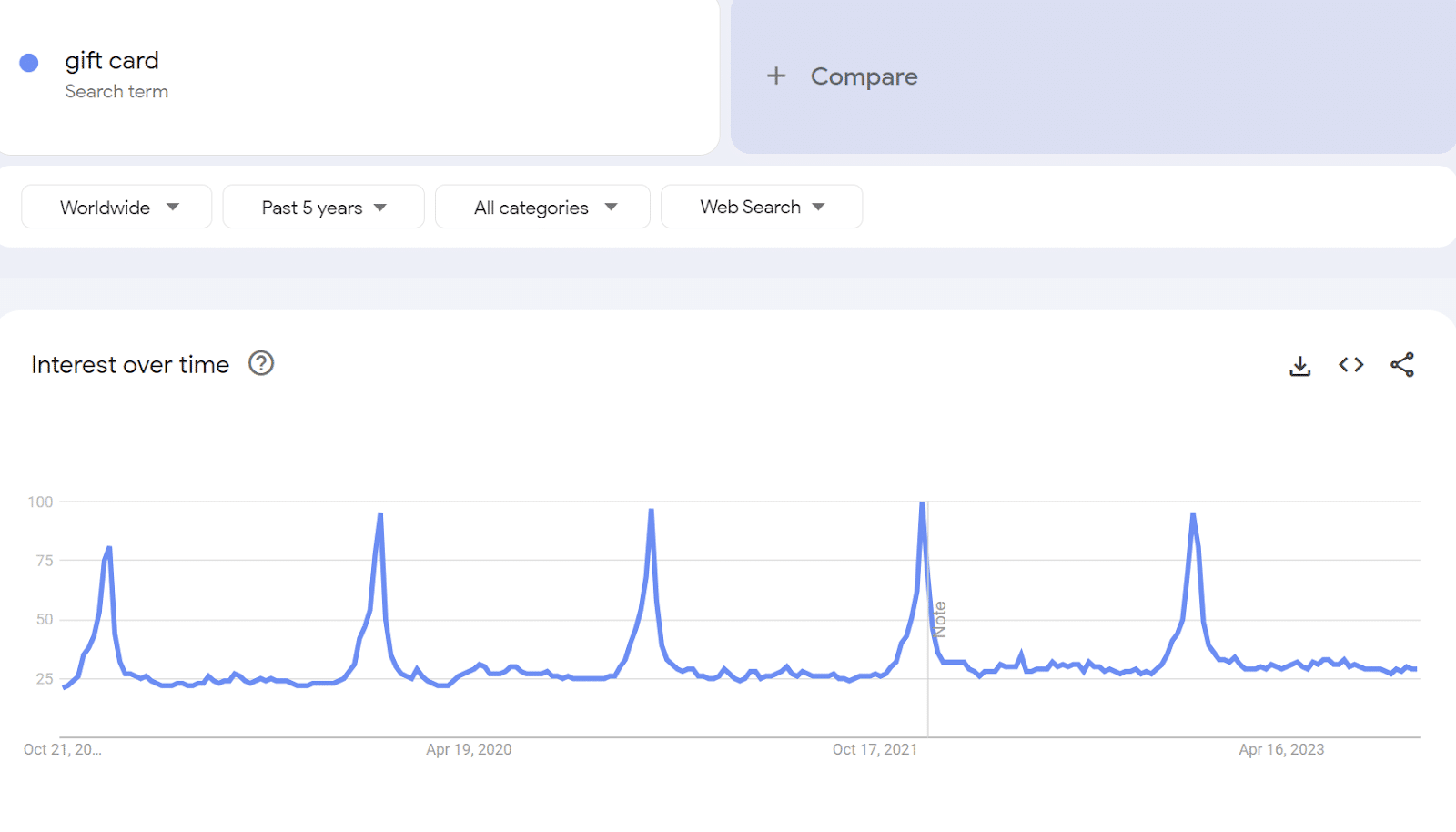

Let me give a few examples. Let’s say you run a gift card business. You probably know that it’s a very seasonal market, and the customer journey is crazy fast.

People who are late buying Christmas gifts will Google “gift card” a couple of days before Christmas – and buy straight away.

In that case, does it make sense to use DDA? Probably not.

Does it make sense to use Performance Max? Probably not, either.

Those prospects will mainly use search. That’s it. That’s the context driving your marketing strategy, not a one-size-fits-all best practice telling you to act blindly.

Here’s another example: say you run a subscription-based business. Would it make sense to retarget website visitors and saturate branded keywords?

Probably not. Those users will mostly be paying customers already.

So, would it make sense to use Performance Max with a bottom-of-funnel goal (something like a subscription)? Probably not, because Performance Max would go crazy on branded and retargeting campaigns. And those would not have great incremental value.

It’s crucial for advertisers to critically assess PPC strategies. As someone who runs a data marketing agency, I always emphasize that strategies shouldn’t rely solely on data.

Don’t feel obligated to strictly follow Google Ads or other ad networks’ best practices, especially if their main pitch is being “AI-infused.”

The post Google Ads best practices: The good, the bad and the balancing act appeared first on Search Engine Land.

Source link : Searchengineland.com